Others - Top Interview Questions(EASY) - (9/9) : Java

Others

Here are some other questions that do not fit in other categories.

We recommend:

Number of 1 Bits

Valid Parentheses.

191. Number of 1 Bits

leetcode.com/problems/number-of-1-bits/

Write a function that takes an unsigned integer and returns the number of '1' bits it has (also known as the Hamming weight).

Note:

- Note that in some languages such as Java, there is no unsigned integer type. In this case, the input will be given as a signed integer type. It should not affect your implementation, as the integer's internal binary representation is the same, whether it is signed or unsigned.

- In Java, the compiler represents signed integers using 2's complement notation. Therefore, in Example 3 below, the input represents the signed integer -3.

Follow up: If this function is called many times, how would you optimize it?

Example 1:

Input: n = 00000000000000000000000000001011

Output: 3

Explanation: The input binary string 00000000000000000000000000001011 has a total of three '1' bits.

Example 2:

Input: n = 00000000000000000000000010000000

Output: 1

Explanation: The input binary string 00000000000000000000000010000000 has a total of one '1' bit.

Example 3:

Input: n = 11111111111111111111111111111101

Output: 31

Explanation: The input binary string 11111111111111111111111111111101 has a total of thirty-one '1' bits.

Constraints:

- The input must be a binary string of length 32

Solution

Approach #1 (Loop and Flip) [Accepted]

Algorithm

The solution is straightforward. We check each of the 32 bits of the number. If the bit is 1, we add one to the count of 1-bits.

We can check the i-th bit of a number using a bit mask. We start with a mask m = 1, because the binary representation of 1 is

0000 0000 0000 0000 0000 0000 0000 0001

Clearly, a bitwise AND between any number and the mask 1 gives us the least significant bit of this number. To check the next bit, we shift the mask to the left by one:

0000 0000 0000 0000 0000 0000 0000 0010

And so on.

Java

    public int hammingWeight(int n) {
        int bits = 0;
        int mask = 1;
        for (int i = 0; i < 32; i++) {
            if ((n & mask) != 0) {
                bits++;
            }
            mask <<= 1;
        }
        return bits;
    }

Complexity Analysis

The run time depends on the number of bits in n. Because n in this piece of code is a 32-bit integer, the time complexity is O(1).

The space complexity is O(1), since no additional space is allocated.

Approach #2 (Bit Manipulation Trick) [Accepted]

Algorithm

We can make the previous algorithm simpler and a little faster. Instead of checking every bit of the number, we repeatedly flip the least-significant 1-bit of the number to 0, and add 1 to the sum. As soon as the number becomes 0, we know that it does not have any more 1-bits, and we return the sum.

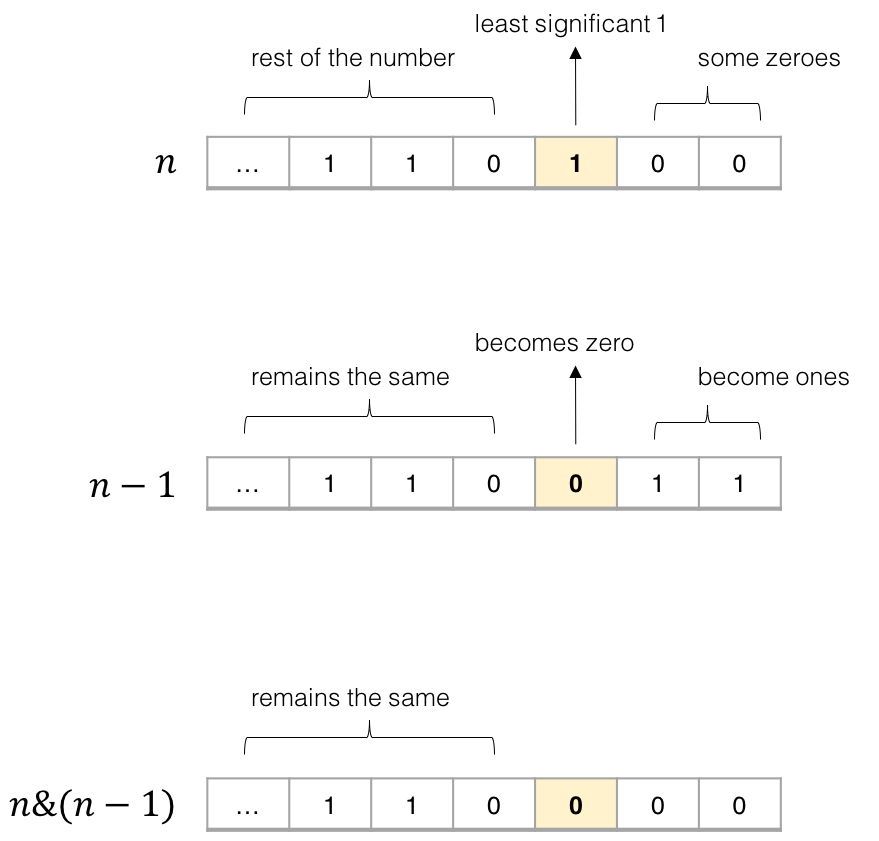

The key idea here is to realize that for any number n, doing a bitwise AND of n and n - 1 flips the least-significant 1-bit in n to 0. Why? Consider the binary representations of n and n - 1.

Figure 1. AND-ing n and n - 1 flips the least-significant 1-bit to 0.

In the binary representation, the least significant 1-bit in n always corresponds to a 0-bit in n - 1: subtracting 1 turns that bit into 0, turns every 0-bit below it into 1, and leaves the higher bits untouched. Therefore, AND-ing the two numbers n and n - 1 always flips the least significant 1-bit in n to 0, and keeps all other bits the same.
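To make the argument concrete, here is a small worked example for n = 12. It is only an illustrative sketch: the class name `ClearLowestOneBit` and the helpers `clearLowestOneBit` and `low8` are hypothetical names chosen for this demo, and only the low 8 bits are printed to keep the output short.

```java
// Illustrative sketch; class and method names are hypothetical.
final class ClearLowestOneBit {
    // Returns n with its least-significant 1-bit cleared.
    static int clearLowestOneBit(int n) {
        return n & (n - 1);
    }

    // Formats the low 8 bits of a value as a zero-padded binary string.
    static String low8(int value) {
        String bits = Integer.toBinaryString(value & 0xFF);
        return String.format("%8s", bits).replace(' ', '0');
    }

    public static void main(String[] args) {
        int n = 12;
        System.out.println("n           = " + low8(n));                    // 00001100
        System.out.println("n - 1       = " + low8(n - 1));                // 00001011
        System.out.println("n & (n - 1) = " + low8(clearLowestOneBit(n))); // 00001000
    }
}
```

Only the lowest 1-bit (the bit worth 4) disappears; the bit worth 8 is unchanged.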

Using this trick, the code becomes very simple.

Java

    public int hammingWeight(int n) {
        int sum = 0;
        while (n != 0) {
            sum++;
            n &= (n - 1);
        }
        return sum;
    }

Complexity Analysis

The run time depends on the number of 1-bits in n. In the worst case, all bits in n are 1-bits. In the case of a 32-bit integer, the run time is O(1).

The space complexity is O(1), since no additional space is allocated.

Analysis written by: @noran.

Here is another way to write it:

    public class Solution {
        // you need to treat n as an unsigned value
        public int hammingWeight(int n) {
            if (n == 0)
                return 0;
            int count = 0;
            int x = n;
            while (x != 0) {
                int lastBit = (x & 1);
                if (lastBit == 1)
                    count++;
                // unsigned shift, so the loop also terminates for negative inputs
                x = (x >>> 1);
            }
            return count;
        }
    }
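The solutions above do not address the follow-up about calling the function many times. One possible direction, sketched here as an assumption rather than as the intended answer, is to precompute the bit counts of all 256 byte values once and then sum four table lookups per call. The class name `TableHammingWeight` and the table name `BITS_IN_BYTE` are hypothetical.

```java
// Illustrative sketch of a byte-wise lookup table; names are hypothetical.
final class TableHammingWeight {
    // BITS_IN_BYTE[b] holds the number of 1-bits in the byte value b (0..255).
    private static final int[] BITS_IN_BYTE = new int[256];

    static {
        for (int b = 1; b < 256; b++) {
            // The entry for b >> 1 is already filled in; add the lowest bit of b.
            BITS_IN_BYTE[b] = BITS_IN_BYTE[b >> 1] + (b & 1);
        }
    }

    // Sums the table entries for the four bytes of n.
    static int hammingWeight(int n) {
        return BITS_IN_BYTE[n & 0xFF]
             + BITS_IN_BYTE[(n >>> 8) & 0xFF]
             + BITS_IN_BYTE[(n >>> 16) & 0xFF]
             + BITS_IN_BYTE[(n >>> 24) & 0xFF];
    }

    public static void main(String[] args) {
        System.out.println(hammingWeight(11)); // 3
        System.out.println(hammingWeight(-3)); // 31
    }
}
```

The table costs 256 ints of memory and is built once; whether it actually beats the loop-based versions depends on the runtime, so it is worth measuring before relying on it.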

461. Hamming Distance

leetcode.com/problems/hamming-distance/

The Hamming distance between two integers is the number of positions at which the corresponding bits are different.

Given two integers x and y, calculate the Hamming distance.

Note:

0 ≤ x, y < 2^31.

Example:

Input: x = 1, y = 4

Output: 2

Explanation:

1   (0 0 0 1)
4   (0 1 0 0)
       ↑   ↑

The above arrows point to positions where the corresponding bits are different.

Solution

Intuition

Hamming distance is an interesting metric that is widely applied in several domains, e.g. in coding theory for error detection, in information theory for quantifying the difference between strings.

The Hamming distance between two integer numbers is the number of positions at which the corresponding bits are different.

This definition calls to mind the bit operation XOR, which outputs 1 if and only if the two input bits are different.

As a result, in order to measure the Hamming distance between x and y, we can first compute x XOR y, then simply count the number of 1-bits in the result.

We have now converted the original problem into a bit-counting problem. There are several ways to count the bits, as we will discuss in the following sections.
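As a quick worked example of the XOR step, the sketch below uses the inputs from the example above (x = 1, y = 4). The class name `XorExample` and the method `differingBits` are hypothetical names used only for illustration.

```java
// Illustrative sketch; class and method names are hypothetical.
final class XorExample {
    // Returns a value whose 1-bits mark the positions where x and y differ.
    static int differingBits(int x, int y) {
        return x ^ y;
    }

    public static void main(String[] args) {
        int x = 1; // 0001
        int y = 4; // 0100
        int diff = differingBits(x, y); // 0101
        System.out.println(Integer.toBinaryString(diff)); // prints 101
    }
}
```

The result has two 1-bits, which matches the expected Hamming distance of 2.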

Approach 1: Built-in BitCounting Functions

Intuition

First of all, let us address the elephant in the room. As one can imagine, most (if not all) programming languages have built-in functions that count the 1-bits for us. So if this task comes up in a project, one should probably just use the built-in function rather than reinvent the wheel. We give an example below.

Now, since this is a LeetCode problem, some of you would argue that using the built-in function is like "implementing a LinkedList with LinkedList", and we fully agree. So do not worry: we will see some fun hand-crafted algorithms for bit counting later.

Algorithm

    class Solution {
        public int hammingDistance(int x, int y) {
            return Integer.bitCount(x ^ y);
        }
    }

Complexity Analysis

- Time Complexity: O(1)

  - There are two operations in the algorithm. First, we do the XOR operation, which takes constant time.

  - Then, we call the built-in bitCount function. In the worst case, the function would take O(k) time, where k is the number of bits in an integer. Since the Integer type is of fixed size in both Python and Java, the overall time complexity of the algorithm is constant, regardless of the input numbers.

- Space Complexity: O(1). A temporary memory of constant size is consumed to hold the result of the XOR operation.

  - We assume that the built-in function also takes constant space.

Approach 2: Bit Shift

Intuition

In order to count the 1-bits, we can shift each bit to either the leftmost or the rightmost position and then check whether that bit is one.

More precisely, we should use the logical shift (the >>> operator in Java), where zeros are shifted in to replace the discarded bits.

Here we adopt the right shift operation, where each bit takes its turn at the rightmost position. Once shifted, we check whether the rightmost bit is one, using either the modulo operation (i.e. i % 2) or the bitwise AND operation (i.e. i & 1). For non-negative values, both operations mask out all bits other than the rightmost one.
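The difference between the two shifts and the two bit checks only shows up for negative values, which fall outside this problem's constraints. The sketch below is an illustration with hypothetical names (`ShiftAndMaskDemo`, `countOnes`) of how Java behaves in that case.

```java
// Illustrative sketch; class and method names are hypothetical.
final class ShiftAndMaskDemo {
    // Counts 1-bits by moving each bit in turn to the rightmost position.
    // Uses >>> so zeros are shifted in, and & 1 so the check also works for negatives.
    static int countOnes(int value) {
        int count = 0;
        while (value != 0) {
            count += value & 1;
            value >>>= 1;
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(-8 >> 1);  // arithmetic shift keeps the sign: -4
        System.out.println(-8 >>> 1); // logical shift fills with zero: 2147483644
        System.out.println(-3 % 2);   // remainder keeps the sign: -1
        System.out.println(-3 & 1);   // mask isolates the lowest bit: 1
        System.out.println(countOnes(-1)); // all 32 bits set: 32
    }
}
```

With the arithmetic shift `>>`, a negative value never reaches 0 (it settles at -1), so a loop of the form `while (value != 0)` would not terminate.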

Algorithm

    class Solution {
        public int hammingDistance(int x, int y) {
            int xor = x ^ y;
            int distance = 0;
            while (xor != 0) {
                // check the rightmost bit
                if ((xor & 1) == 1)
                    distance += 1;
                // logical shift: zeros are shifted in from the left
                xor = xor >>> 1;
            }
            return distance;
        }
    }

Complexity Analysis

- Time Complexity: O(1). Since the Integer is of fixed size in Python and Java, the algorithm takes constant time. For a 32-bit integer, the algorithm takes at most 32 iterations.

- Space Complexity: O(1). A constant amount of memory is used, regardless of the input.

Approach 3: Brian Kernighan's Algorithm

Intuition

After the above approach, one might wonder: "rather than shifting the bits one by one, is there a faster way to count the 1-bits?" The answer is yes.

If we were asked to count the 1-bits as humans, rather than mechanically examining each bit, we would skip the 0-bits in between the 1-bits, e.g. in 10001000.

In the above example, after encountering the first 1-bit from the right, it would be more efficient to jump straight to the next 1-bit, skipping all the zeros in between.

This is the basic idea of Brian Kernighan's bit counting algorithm, which combines bit and arithmetic operations to clear the rightmost 1-bit. Here is the secret recipe.

When we do a bitwise AND between number and number-1, the rightmost 1-bit in the original number is cleared.

Based on the above idea, we can count the 1-bits for the input 10001000 in 2 iterations, rather than 8.
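The iteration counts quoted above can be checked with a small sketch. The class `KernighanIterations` and its two helper methods are hypothetical names; they count loop iterations only and are not part of the solution itself.

```java
// Illustrative sketch; class and method names are hypothetical.
final class KernighanIterations {
    // Iterations needed when each step clears the rightmost 1-bit
    // (this equals the number of 1-bits in value).
    static int kernighanIterations(int value) {
        int iterations = 0;
        while (value != 0) {
            value &= value - 1;
            iterations++;
        }
        return iterations;
    }

    // Iterations needed when each step shifts right by one bit
    // (this equals the position of the highest 1-bit, counted from 1).
    static int shiftIterations(int value) {
        int iterations = 0;
        while (value != 0) {
            value >>>= 1;
            iterations++;
        }
        return iterations;
    }

    public static void main(String[] args) {
        int value = 0b10001000;
        System.out.println(kernighanIterations(value)); // 2
        System.out.println(shiftIterations(value));     // 8
    }
}
```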

Algorithm

Note: according to the online collection Bit Twiddling Hacks, the algorithm was published as an exercise in 1988, in The C Programming Language, 2nd Ed. (by Brian W. Kernighan and Dennis M. Ritchie), though on April 19, 2006 Donald Knuth pointed out that this method "was first published by Peter Wegner in CACM 3 (1960), 322. (Also discovered independently by Derrick Lehmer and published in 1964 in a book edited by Beckenbach.)". One can find many other tricks for bit operations in Bit Twiddling Hacks.

    class Solution {
        public int hammingDistance(int x, int y) {
            int xor = x ^ y;
            int distance = 0;
            while (xor != 0) {
                distance += 1;
                // remove the rightmost bit of '1'
                xor = xor & (xor - 1);
            }
            return distance;
        }
    }

Complexity Analysis

- Time Complexity: O(1).

  - As with the bit shift approach, since the size (i.e. number of bits) of an integer is fixed, the time complexity is constant.

  - However, this algorithm typically requires fewer iterations than the bit shift approach, as discussed in the intuition.

- Space Complexity: O(1). A constant amount of memory is used, regardless of the input.
